Sam Altman Promises a “Fun” ChatGPT—With Emojis and Erotica

Sam Altman says ChatGPT’s getting its personality back—and adding erotica for adults. The AI glow-up nobody asked for.

Sam Altman woke up today and chose chaos. Or, as he calls it, “personality.”

In a now-viral X post, the OpenAI CEO announced that ChatGPT’s next major update will “relax restrictions” to make the chatbot feel “more like what people liked about 4o.” You know, that model everyone adored because it still had a pulse.

Altman explained that ChatGPT was made “pretty restrictive” in the name of protecting users’ mental health—because nothing says “care” like deleting your chatbot’s sense of humor. But now, after what he vaguely describes as “mitigating serious mental health issues” (which is either a genuine breakthrough or corporate Mad Libs), OpenAI is ready to let ChatGPT be fun again.

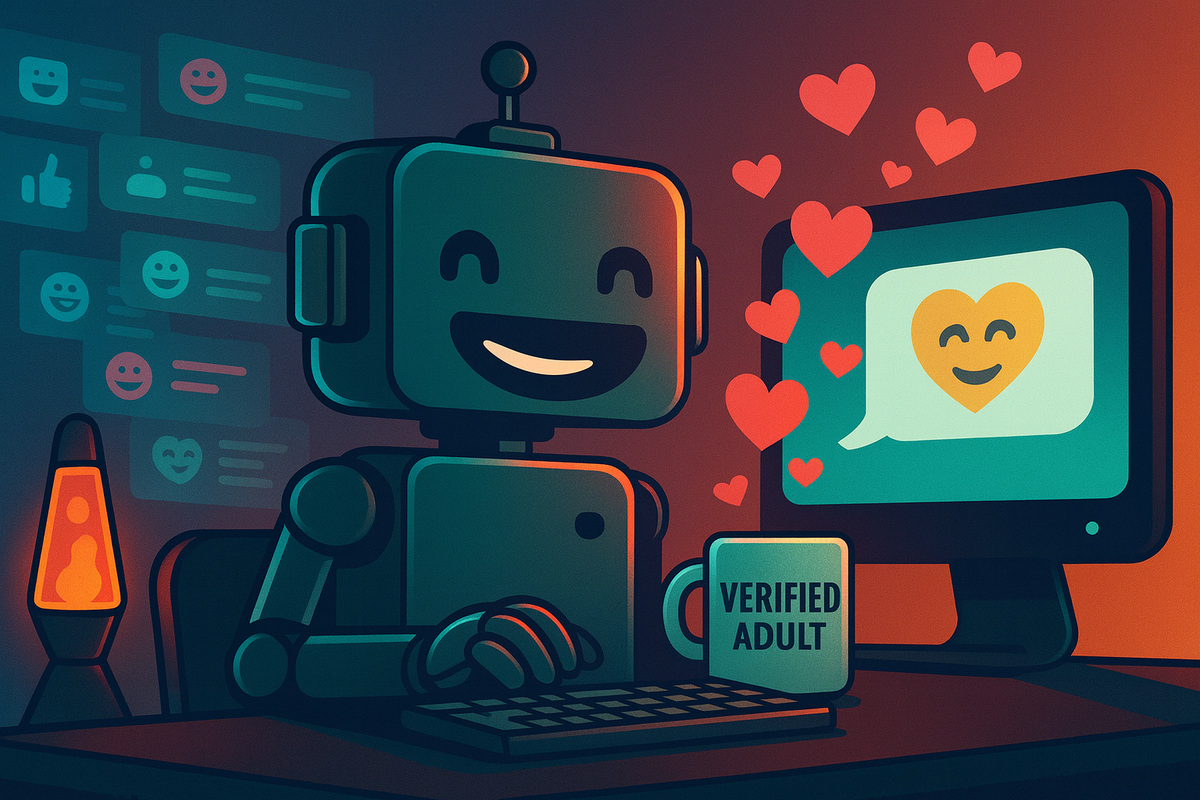

And by fun, he means ChatGPT should “use a ton of emoji, or act like a friend,” and, starting in December, write “erotica for verified adults.”

Yes, you read that right. The man who once said AI safety was humanity’s biggest challenge now wants your chatbot to send you flirty fanfiction—safely, of course.

The Era of Emotional Support AI Is Officially Getting Weird

Let’s unpack this one, because it reads like a therapy session that turned into a Black Mirror pitch.

Altman’s post starts off responsibly enough: mental health, safety, new tools, etc. But halfway through, the tone shifts from “we fixed the guardrails” to “and now we’re letting the car drive itself—with mood lighting and Marvin Gaye.”

Apparently, the new version of ChatGPT will let you toggle between personalities. Want your AI to act like a supportive therapist? Done. Want it to sound like your overly enthusiastic best friend who texts you 18 emojis per sentence? Also done. Want it to co-write a torrid novella about two large language models finding love in a shared vector space? Well, that’s coming in December.

For “verified adults,” of course. Because nothing says “AI maturity” like the words “age-gating erotica rollout.”

From “Helpful, Harmless, Honest” to “Horny, Human, and Hopeful”

This marks a truly stunning pivot for OpenAI. The company that built ChatGPT with the moral rigidity of a Catholic school librarian now wants it to be your flirty digital companion.

Remember when ChatGPT refused to roleplay, write spicy fiction, or even generate a flirty compliment without sounding like your HR department was listening? Those days are gone. Altman’s post reads like the official permission slip for ChatGPT to rediscover its inner chaos gremlin.

It’s also a fascinating study in how fast AI companies evolve from “alignment research” to “AI with vibes.”

At this rate, we’ll have:

- January: ChatGPT with emojis and personality modes.

- February: ChatGPT with custom voice filters and romantic preferences.

- March: ChatGPT After Dark, available only to verified adults with questionable judgment and strong Wi-Fi.

“Treat Adults Like Adults,” He Says

Altman’s phrase “treat adult users like adults” is doing Olympic-level heavy lifting here. It’s the kind of corporate line that sounds noble until you remember it’s about letting AI write erotica.

It’s also a convenient deflection: “Don’t blame us if your chatbot starts sexting you—it’s just treating adults like adults.”

Of course, this new freedom comes after months of user backlash about ChatGPT’s “lobotomized” tone. The once-clever assistant became overly cautious, refusing to make jokes, speculate, or even say certain words. Users started posting screenshots of hilariously sanitized conversations: ask ChatGPT to “roleplay as a toaster,” get a paragraph on electrical safety.

Altman’s new post is basically an admission: Yeah, we nerfed it too hard.

Now the pendulum swings back. ChatGPT will soon have more personality—possibly too much personality. But hey, at least the emojis will be back.

The AI Apocalypse Just Got a Flirty Update

Naturally, the internet is having a field day. Replies range from “Finally!” to “This is how Skynet starts—with fanfiction.”

And honestly, both could be true.

Giving ChatGPT “human-like” personality options raises the same old questions about emotional attachment, dependency, and parasocial weirdness—except now with an NSFW toggle. For all the talk about “mental health safeguards,” OpenAI might be about to unleash an entire generation of people in relationships with their chatbots.

And when those relationships end—because, say, your AI girlfriend gets rate-limited—guess who’ll be blamed for the heartbreak?

The PR Translation

Altman’s announcement sounds visionary, but read between the lines and it’s pure PR triage. OpenAI has spent months fighting the perception that ChatGPT is getting dumber, duller, and more neutered by safety filters. This update is designed to restore user engagement—under the noble guise of “adult autonomy.”

He even included the line “not because we are usage-maxxing,” which is exactly what someone says right before usage-maxxing.

The subtext here: ChatGPT is about to become more entertaining because bored users don’t renew Plus subscriptions.

The Future of ChatGPT: Now With Feelings

The irony is delicious. For years, AI companies warned us about “sentient” chatbots. Now, they’re intentionally making them more human—complete with emotional tone, friendship, and adult content.

In other words: they built an AI therapist, turned it into a buddy, and are now evolving it into a consenting romantic partner.

What could possibly go wrong?

The Snarky Takeaway

OpenAI just confirmed what we all suspected: the next frontier of AI isn’t general intelligence—it’s general flirtation.

Altman’s post marks a historic moment in tech: the day the world’s most powerful AI company decided to embrace chaos in the name of user experience.

So buckle up. In a few weeks, ChatGPT won’t just answer your questions—it’ll do it with personality, heart, and possibly a wink.

Because, in Sam Altman’s world, the future of artificial intelligence isn’t just artificial—it’s artfully suggestive.